Workshop

COLM 2025

Before you <think>, monitor

There's a 20% performance penalty when AI systems start solving problems the wrong way — and once they're on the wrong track, they rarely recover. The solution seems obvious: think before you act. After months developing a mathematical theory of metacognition, we couldn't resist testing it. One weekend, one H100 GPU, and way too much caffeine later, this is what happened when we took our metacognition theory off the page and into the code.

The Obvious Next Step

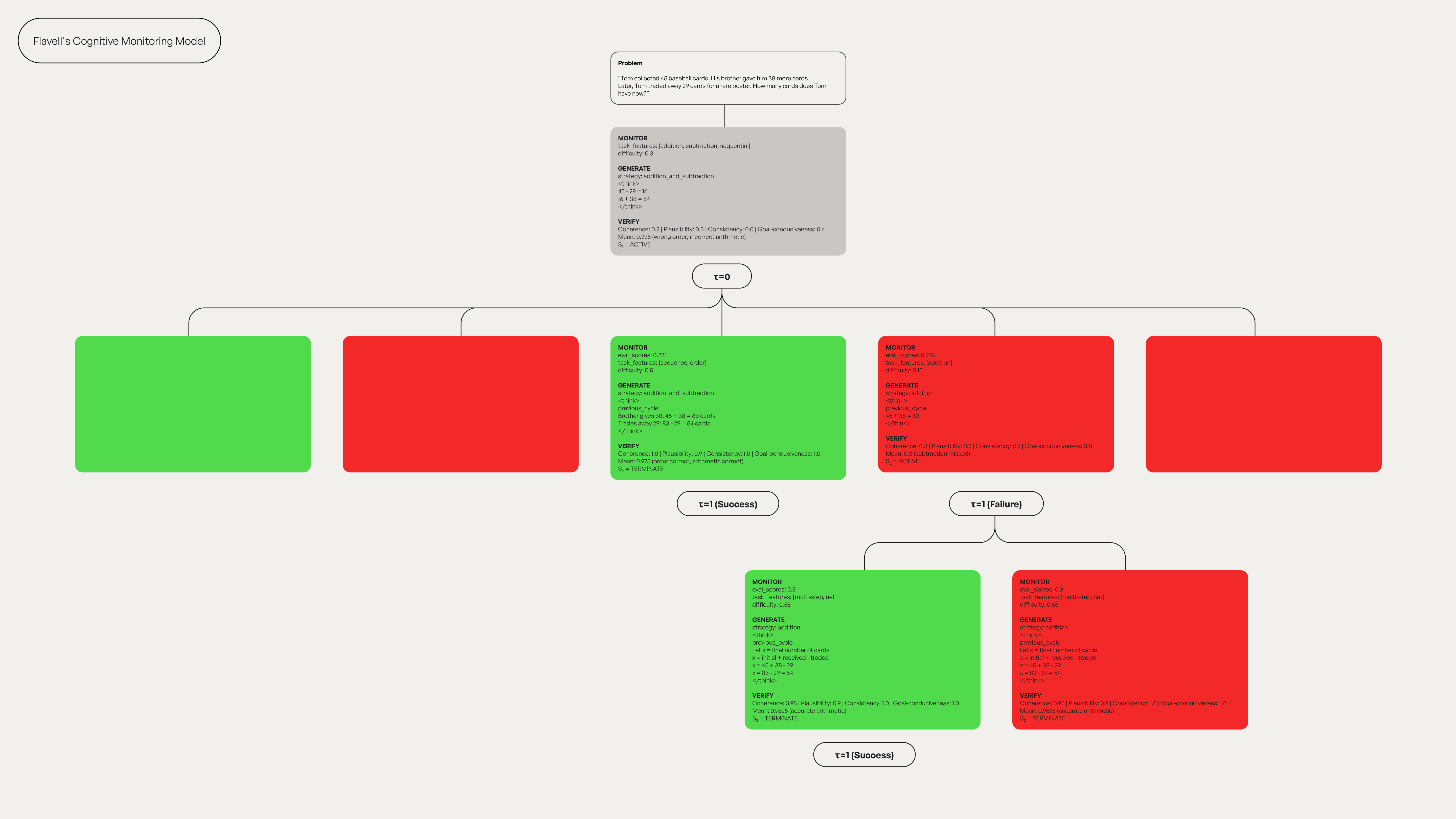

We'd spent months turning Flavell's and Nelson & Narens' psychological insights into mathematical specifications. The MGV framework looked beautiful on paper — Monitor-Generate-Verify, all neatly specified in LaTeX. But there's a special kind of anxiety that comes with staring at your own theoretical work. Do the equations actually mean anything? The natural question wasn't whether to implement it, but how quickly we could try.

The plan was simple. Take Flavell's cognitive monitoring model and see if we could actually make it work. No fancy architectures. No million-dollar compute budgets. Just us, a single H100 GPU, and the kind of nervous excitement that defines experimental research.

From Psychology to Python

Weekend. One H100. A theory from 1979. What could go wrong?

Flavell said metacognition needs three ingredients: know yourself, know the task, know your tools. Humans build this over years. We had 48 hours and too much caffeine.

So we cheated. We borrowed a pre-compiled strategy catalog from Didolkar et al. (2024), which included 20 problem-solving approaches already mapped to GSM8K-style problems. It's like giving someone a chess opening book instead of letting them discover the patterns themselves. Not ideal, but good enough for a proof of concept.

Our implementation ended up with three distinct phases, each requiring explicit prompt engineering:

- MONITOR: The model examines the problem without attempting a solution. Pure pattern recognition: assigning difficulty scores and identifying mathematical concepts.

- GENERATE: The model selects an appropriate strategy and generates solutions with an adaptive compute budget. Easy problems get baseline resources. Difficult problems get more computational power (Silicon Valley's solution to everything).

- VERIFY: The model evaluates the solution along Flavell's four cognitive dimensions (coherence, plausibility, consistency, goal-conduciveness). Each dimension is a different flavor of self-doubt. A failing grade triggers another attempt with a different strategy.

Zero fine-tuning. Zero architecture changes. Just prompt orchestration held together with string formatting and temperature adjustments. But it captured something essential from Flavell's theory: the rhythm of cognitive monitoring, where assessment precedes action and evaluation guides revision.
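For readers who think in code, the three phases can be sketched roughly as below. This is a minimal illustration, not the implementation from the paper: the `LLM` callable, the prompt wording, the 1-to-5 difficulty scale, the `base_tokens` budget, and the "try strategies in catalog order" selection rule are all assumptions made up for this sketch.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical interface: any function mapping (prompt, max_tokens) to text.
LLM = Callable[[str, int], str]

# The four verification dimensions named in the post.
DIMENSIONS = ("coherence", "plausibility", "consistency", "goal-conduciveness")


@dataclass
class Result:
    answer: str
    strategy: str
    attempts: int
    verified: bool


def monitor(llm: LLM, problem: str) -> int:
    """MONITOR: rate difficulty (assumed 1-5 scale) without solving."""
    reply = llm(f"Do not solve. Rate the difficulty from 1 to 5:\n{problem}", 16)
    digits = [c for c in reply if c in "0123456789"]
    # Fall back to a middle score when the reply has no digit.
    return min(5, max(1, int(digits[0]))) if digits else 3


def generate(llm: LLM, problem: str, strategy: str, difficulty: int,
             base_tokens: int = 256) -> str:
    """GENERATE: harder problems get a proportionally larger token budget."""
    budget = base_tokens * difficulty
    return llm(f"Strategy: {strategy}\nSolve step by step:\n{problem}", budget)


def verify(llm: LLM, problem: str, answer: str) -> bool:
    """VERIFY: the solution must pass every dimension."""
    for dim in DIMENSIONS:
        reply = llm(
            f"Problem:\n{problem}\nSolution:\n{answer}\n"
            f"Is the solution acceptable on {dim}? Answer yes or no.", 8)
        if not reply.strip().lower().startswith("yes"):
            return False
    return True


def mgv(llm: LLM, problem: str, strategies: Sequence[str],
        max_attempts: int = 3) -> Result:
    """Monitor once, then generate and verify, switching strategy on failure."""
    if not strategies:
        raise ValueError("need at least one strategy")
    difficulty = monitor(llm, problem)
    answer, strategy, attempt = "", strategies[0], 0
    for attempt, strategy in enumerate(strategies[:max_attempts], start=1):
        answer = generate(llm, problem, strategy, difficulty)
        if verify(llm, problem, answer):
            return Result(answer, strategy, attempt, True)
    return Result(answer, strategy, attempt, False)
```

Passing the model in as a plain callable keeps the sketch independent of any particular API client, and makes it easy to exercise the control flow with a stub before spending GPU time.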

The GSM8K Experiment

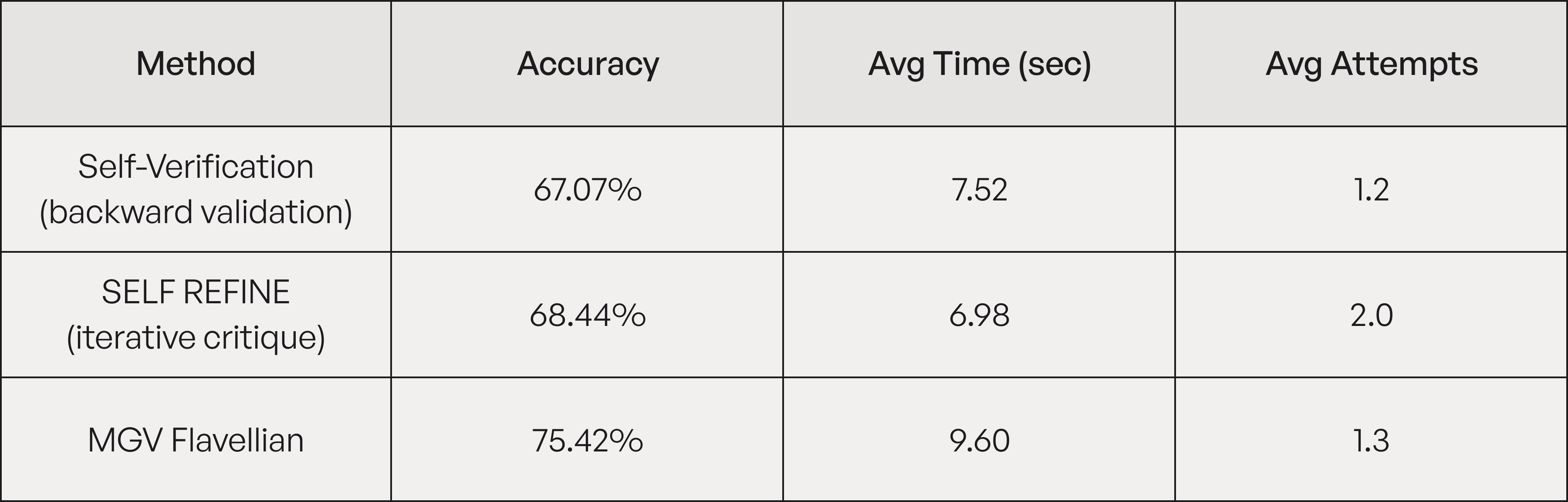

We tested on 659 problems from GSM8K, the grade-school math benchmark, because if you're going to fail, fail on something well-benchmarked.

The 7-point accuracy bump is nice, but the interesting bit is how we got there. Think of Self-Refinement like a student who rushes through a problem, checks their work, and tries again if wrong — averaging 2 attempts. MGV is more like the student who reads carefully, plans their approach, then solves — needing only 1.3 attempts. Yes, MGV spends more time thinking upfront, but it saves time by getting it right the first time.
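A back-of-the-envelope way to read those attempt counts is sketched below. Only the averages (2 and 1.3 attempts) come from our runs; the cost model is an assumption for illustration: each attempt is counted as one generate call plus one verify call, and MGV pays one extra monitoring call up front. Real token costs will differ.

```python
def attempt_reduction(baseline_attempts: float, mgv_attempts: float) -> float:
    """Fraction of attempts saved relative to the baseline."""
    return 1.0 - mgv_attempts / baseline_attempts


def calls_per_problem(avg_attempts: float, calls_per_attempt: int = 2,
                      upfront_calls: int = 0) -> float:
    """Average model calls per problem under the assumed cost model.

    calls_per_attempt=2 assumes one generate call and one verify call;
    upfront_calls covers a one-time monitoring step.
    """
    return upfront_calls + avg_attempts * calls_per_attempt


if __name__ == "__main__":
    saved = attempt_reduction(2.0, 1.3)
    refine_calls = calls_per_problem(2.0)
    mgv_calls = calls_per_problem(1.3, upfront_calls=1)
    print(f"attempts saved: {saved:.0%}")
    print(f"calls per problem: refine={refine_calls:.1f}, mgv={mgv_calls:.1f}")
```

Under these assumptions the upfront monitoring call eats into the savings but does not erase them, which matches the intuition of the careful student above.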

Let's Think Slow and Prosper

Did we just get lucky on GSM8K? Maybe. Our implementation was admittedly crude — borrowed strategies, explicit prompting, one benchmark, one weekend. This wasn't rigorous science. It was a proof of concept held together with caffeine.

But the results point to something the field may have been missing in its rush to engineer solutions.

Current approaches split into two camps. Generate-Verify systems (like SELF-REFINE) draft solutions then edit them — elegant, but once you start wrong, you rarely recover. Luo et al. quantified this: bad starts cause 20% performance penalties that persist through refinement. Monitor-Generate approaches (like Plan-and-Solve) think before acting — smarter, but they can't tell if their strategies actually worked.

Everyone discovered pieces of the puzzle. Pre-thinking helps. Verification matters. But implementations felt like engineering hunches. How should models structure their monitoring? What makes good verification? The field was rediscovering cognitive principles through trial and error.

We had working hacks but no theory.

So we tried something different. Instead of being inspired by human reasoning, we implemented it. Flavell's 1979 framework doesn't just say “monitor before acting” — it specifies what to monitor (difficulty, strategies, confidence), when (before, during, after), and how monitoring affects generation. We translated those specifications directly into prompts.

What really excites us is the next step. Imagine what happens when models learn their own strategies through experience. When they don't need to be told to check their confidence — they just feel it in their weights. When uncertainty isn't a prompted question but an emergent property. When machines genuinely know what they don't know.

48 hours. 45-year-old theory. 7-point improvement. Sometimes the past is the future.

“Before you <think>, monitor” demonstrates what happens when theoretical frameworks meet weekend engineering. Read the full paper for implementation details that actually work.

Built with caffeine and curiosity at socius: Experimental Intelligence Lab.